
Translation

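Returning to the USB_shadow_map shader that we created at the beginning of this chapter, in this section we will define a texture that lets our object receive shadows. To do this, we add the LightMode tag to the Tags block of the color pass and set it to ForwardBase, so that Unity knows this pass is affected by lighting.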

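    // default color Pass
    Pass
    {
        Name "Shadow Map Texture"
        Tags
        {
            "RenderType"="Opaque"
            "LightMode"="ForwardBase"
        }
        ...
    }

Since the shadow texture is projected onto UV coordinates, we have to declare a sampler2D variable. Likewise, we have to include the coordinates used to sample the texture. This takes place in the fragment shader stage, because the projection must be calculated per pixel.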

    // default color Pass
    Pass
    {
        Name "Shadow Map Texture"
        Tags
        {
            "RenderType"="Opaque"
            "LightMode"="ForwardBase"
        }
        CGPROGRAM
        …
        struct v2f
        {
            float2 uv : TEXCOORD0;
            UNITY_FOG_COORDS(1)
            float4 vertex : SV_POSITION;
            // declare the UV coordinates for the shadow map
            // (TEXCOORD2, because UNITY_FOG_COORDS(1) already occupies TEXCOORD1)
            float4 shadowCoord : TEXCOORD2;
        };
        sampler2D _MainTex;
        float4 _MainTex_ST;
        // declare a sampler for the shadow map
        sampler2D _ShadowMapTexture;
        …
        ENDCG
    }

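It is worth mentioning that the _ShadowMapTexture texture exists only within the shader program, so it does not need to be declared in the shader's Properties block, and we do not assign a texture to it from the Inspector. Instead, we only need to generate a projection that works as a texture.

So, how do we generate a texture projection in our shader?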

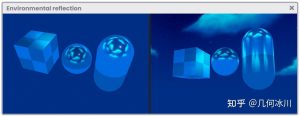

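To do this, we need to understand how the projection matrix UNITY_MATRIX_P works. This matrix transforms coordinates from view space to clip space, which also clips the objects on screen. It has a fourth component, W, which defines the homogeneous coordinates of the projection.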

Orthographic projection space

W = -((Z_far + Z_near) / (Z_far - Z_near))

Perspective projection space

W = -(2 * Z_far * Z_near / (Z_far - Z_near))
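To make the view-space to clip-space step concrete, here is a minimal Cg sketch of applying the projection matrix by hand. The function name ViewToClipSketch is hypothetical and does not appear in the original shader, and the snippet assumes UnityCG.cginc is included so that UNITY_MATRIX_P is available. In practice, UnityObjectToClipPos performs the full object-to-clip transformation for us.

```hlsl
// Assumes: #include "UnityCG.cginc" (provides UNITY_MATRIX_P).
// ViewToClipSketch is a hypothetical name used only for illustration.
float4 ViewToClipSketch(float3 viewPos)
{
    // promote the view-space position to homogeneous coordinates (w = 1),
    // then multiply by the projection matrix
    float4 clipPos = mul(UNITY_MATRIX_P, float4(viewPos, 1.0));
    // clipPos.w is the homogeneous component later used for the divide
    return clipPos;
}
```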

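As mentioned in section 1.1.6, the UNITY_MATRIX_P matrix defines the position of an object's vertices relative to the camera frustum. The result of this transformation (performed inside the UnityObjectToClipPos function) leads to a coordinate space called Normalized Device Coordinates (NDC). These coordinates lie in the range [-1, 1] and are obtained by dividing the X, Y and Z coordinates by W, as follows: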

projection.X / projection.W

projection.Y / projection.W

projection.Z / projection.W
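As a rough illustration of the division described above, the following Cg helper performs the perspective divide on a clip-space position. The name ClipToNDC and its parameter are hypothetical and are not part of the original shader; the input is assumed to be the value returned by UnityObjectToClipPos.

```hlsl
// Hypothetical helper (not from the original shader): perspective divide.
// clipPos is assumed to be the output of UnityObjectToClipPos.
float3 ClipToNDC(float4 clipPos)
{
    // divide the X, Y and Z components by the homogeneous W component
    return clipPos.xyz / clipPos.w;
}
```

For the shadow projection only the X and Y results matter, since those are the two values that end up as texture coordinates.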

![Unity Shader Bible 8.0.3 | Shadow Map](https://gamedevfan.cn/wp-content/uploads/2025/05/image-102-1024x395.jpeg)

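We need to understand this process because we have to use the tex2D function to position the shadow map in our shader. As we already know, this function takes two arguments: the first is the texture itself (here, the _ShadowMapTexture we declared earlier), and the second is the texture's UV coordinates. In the case of a projection, we have to convert NDC coordinates to UV coordinates. How do we do that? Keep in mind that UV coordinates range over [0.0f, 1.0f], while NDC coordinates range over [-1.0f, 1.0f]. To go from NDC to UV, we can therefore do the following: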

NDC = [-1, 1] + 1

NDC = [0, 2] / 2

NDC = [0, 1]

Condensed into a single expression:

(NDC + 1) / 2
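As a quick sanity check of this remapping, we can evaluate it at the two ends and the middle of the NDC range. The symbol UV below is just shorthand for the remapped value:

```latex
\[
\mathrm{UV} = \frac{\mathrm{NDC} + 1}{2}, \qquad
\frac{-1 + 1}{2} = 0, \quad
\frac{0 + 1}{2} = 0.5, \quad
\frac{1 + 1}{2} = 1
\]
```

The left edge of the NDC range lands on 0, the center on 0.5 and the right edge on 1, which is exactly the [0, 1] range that UV coordinates use.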

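Now, what is the NDC value in this expression? As mentioned earlier, it is obtained by dividing the X, Y and Z coordinates by W.

We can therefore deduce that the NDC X coordinate equals the X component divided by the W component, and the NDC Y coordinate equals the Y component divided by the W component.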

NDC.x = projection.X / projection.W

NDC.y = projection.Y / projection.W

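We bring this up because, in Cg or HLSL, UV coordinate values are accessed through the XY components. What does this mean?

Suppose our input struct contains float2 uv : TEXCOORD0. We can then access the U coordinate through uv.x and the V coordinate through uv.y. In this case, however, we need to transform NDC coordinates to UV coordinates, so, going back to the previous operation, the UV coordinates become: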

U = ((projection.X / projection.W) + 1) * 0.5

V = ((projection.Y / projection.W) + 1) * 0.5
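Putting the divide and the remap together, a minimal Cg sketch of the conversion might look like the following. ProjectionToUV is a hypothetical name chosen for illustration, and the sketch ignores platform differences in vertical texture orientation, so treat it as an outline of the idea rather than a finished implementation.

```hlsl
// Sketch under assumptions: ProjectionToUV is a hypothetical name.
// clipPos is assumed to hold a clip-space position (X, Y, Z, W).
float2 ProjectionToUV(float4 clipPos)
{
    float2 uv;
    uv.x = ((clipPos.x / clipPos.w) + 1.0) * 0.5; // U in [0, 1]
    uv.y = ((clipPos.y / clipPos.w) + 1.0) * 0.5; // V in [0, 1]
    return uv;
}

// Possible use in a fragment shader, where i.shadowCoord is the v2f field
// declared earlier and is assumed to carry the clip-space position:
// fixed4 shadowSample = tex2D(_ShadowMapTexture, ProjectionToUV(i.shadowCoord));
```

Doing the divide in the fragment stage keeps the result correct per pixel, which matches the earlier remark that the projection must be calculated per pixel.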

Original text

Continuing with the USB_shadow_map shader: in this section, we will define a texture so that our object can receive shadows. To do this, we include the LightMode tag in the color pass and set it to ForwardBase; this way, Unity will know that this pass is affected by lighting.

    // default color Pass
    Pass
    {
        Name "Shadow Map Texture"
        Tags
        {
            "RenderType"="Opaque"
            "LightMode"="ForwardBase"
        }
        ...
    }

Since these types of shadows correspond to a texture that is projected on UV coordinates, we have to declare a sampler2D variable. Likewise, we have to include coordinates to sample the texture. This process will be carried out in the fragment shader stage because the projection must be calculated per-pixel.

    // default color Pass
    Pass
    {
        Name "Shadow Map Texture"
        Tags
        {
            "RenderType"="Opaque"
            "LightMode"="ForwardBase"
        }
        CGPROGRAM
        …
        struct v2f
        {
            float2 uv : TEXCOORD0;
            UNITY_FOG_COORDS(1)
            float4 vertex : SV_POSITION;
            // declare the UV coordinates for the shadow map
            // (TEXCOORD2, because UNITY_FOG_COORDS(1) already occupies TEXCOORD1)
            float4 shadowCoord : TEXCOORD2;
        };
        sampler2D _MainTex;
        float4 _MainTex_ST;
        // declare a sampler for the shadow map
        sampler2D _ShadowMapTexture;
        …
        ENDCG
    }

It is worth mentioning that the _ShadowMapTexture texture will only exist within the program. It therefore should not be declared as a property in our shader's Properties, nor will we pass any texture to it from the Inspector. Instead, we will generate a projection that works as a texture.

So, how do we generate a texture projection in our shader?

To do this, we must understand how the UNITY_MATRIX_P projection matrix works. This matrix takes us from view space to clip space, which clips the objects on screen. Given its nature, it has a fourth coordinate, W, which we discussed earlier and which, in this case, defines the homogeneous coordinates that make the projection possible.

Orthographic projection space

W = -((Z_far + Z_near) / (Z_far - Z_near))

Perspective projection space

W = -(2 * Z_far * Z_near / (Z_far - Z_near))

As we mentioned in section 1.1.6, UNITY_MATRIX_P defines the vertex position of our object in relation to the frustum of the camera. The result of this operation, which is carried out within the UnityObjectToClipPos function, generates space coordinates called Normalized Device Coordinates (NDC). These types of coordinates have a range between minus one and one [-1, 1], and are generated by dividing the XYZ axes by the W component as follows:

projection.X / projection.W

projection.Y / projection.W

projection.Z / projection.W

![Unity Shader Bible 8.0.3 | Shadow Map](https://gamedevfan.cn/wp-content/uploads/2025/05/image-102-1024x395.jpeg)

It is essential to understand this process because we have to use the tex2D function to position the shadow texture in our shader. This function, as we already know, takes two arguments: the first is the texture itself, in this case the _ShadowMapTexture that we declared above, and the second is the texture's UV coordinates. In the case of a projection, however, we have to transform normalized device coordinates to UV coordinates. How do we do this? We must remember that UV coordinates have a range between zero and one [0.0f, 1.0f], while NDC, as we just mentioned, range between minus one and one [-1.0f, 1.0f]. So, to transform from NDC to UV, we perform the following operation:

NDC = [-1, 1] + 1

NDC = [0, 2] / 2

NDC = [0, 1]

This operation is summarized in the following equation.

(NDC + 1) / 2

Now, what is the NDC value? As we mentioned earlier, its coordinates are generated by dividing the XYZ axes by their component W.

Consequently, we can deduce that the X coordinate equals the X projection, divided by its W component, and the Y coordinate equals the Y projection, also divided by the W component.

NDC.x = projection.X / projection.W

NDC.y = projection.Y / projection.W

The reason we mention this concept is that, in Cg or HLSL, the UV coordinate values are accessed from the XY components. What does this mean?

Suppose we have the input float2 uv : TEXCOORD0. We can access the U coordinate from the uv.x component, and likewise the V coordinate from uv.y. In this case, however, we have to transform the coordinates from Normalized Device Coordinates to UV coordinates. Therefore, going back to the previous operation, the UV coordinates take the following values:

U = ((projection.X / projection.W) + 1) * 0.5

V = ((projection.Y / projection.W) + 1) * 0.5
